What Is a Real-Time Streaming Architecture?

Real-time streaming architecture is a way of designing systems so they can process and use data as it is created. In this approach, information flows in continuously from different sources, such as websites, mobile apps, sensors, or transaction systems. The data is collected, processed, and delivered to other systems or users within seconds or even milliseconds.

This is different from batch processing, where data is gathered over a period of time and then processed all at once. With real-time streaming, there is no need to wait for a scheduled job. Instead, actions and insights happen immediately.
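The contrast can be sketched in a few lines of plain Python. This is only an illustration and is not tied to any streaming product; the `events` list and both function names are hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical purchase amounts arriving over time.
events = [20.0, 5.5, 12.0, 40.0]

def batch_total(collected: list[float]) -> float:
    """Batch style: wait until all events are collected, then process once."""
    return sum(collected)

def streaming_totals(stream: Iterable[float]) -> Iterator[float]:
    """Streaming style: update and emit the result as each event arrives."""
    running = 0.0
    for amount in stream:
        running += amount
        yield running

print(batch_total(events))             # one answer, available only after the job runs
print(list(streaming_totals(events)))  # an updated answer after every event
```

The batch function cannot return anything until the whole list exists, while the generator produces a usable result after each event.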

This is especially useful for situations where quick response is important, like detecting fraud in banking, recommending products to customers while they shop online, tracking deliveries as they move, or monitoring machines in a factory to prevent breakdowns.

You can learn more about the concepts and benefits in real-time data streaming.

Benefits of Real-Time Architecture

- Low-latency decision-making – Data is processed within milliseconds to seconds of arrival, allowing organizations to respond quickly to changes or problems. For example, a fraud detection system can block suspicious transactions before they are completed.
- Real-time analytics – Continuous data streams give an up-to-the-second view of business operations. This helps in tracking sales as they happen, monitoring system performance, or analyzing customer behavior in the moment.
- Data enrichment and ETL modernization – Streaming systems can combine live event data with historical or external sources. This makes reports, dashboards, and machine learning models more accurate while reducing delays compared to traditional batch ETL.

Streaming Architecture Example Library

This section is a collection of real-world patterns that show how organizations use streaming systems in different industries. Each example outlines the main data sources, the flow of information, and the end results. These patterns can serve as a reference when designing your own real-time solutions, helping you understand how technologies like Kafka, Flink, and modern storage systems fit together.

Fraud Detection in Financial Services

Banks and payment companies handle millions of transactions every day, and some of these may be fraudulent. Real-time streaming architecture helps them spot suspicious activity within seconds. In this setup, every transaction event is sent to Kafka. Apache Flink then processes the data as it arrives and runs it through a machine learning model trained to detect unusual patterns, such as sudden large withdrawals or purchases from unexpected locations. If the model finds something unusual, an alert is sent to a fraud analyst who can review it right away, or the system can automatically block the transaction to prevent losses. This process happens in seconds, which is much faster than traditional batch processing.

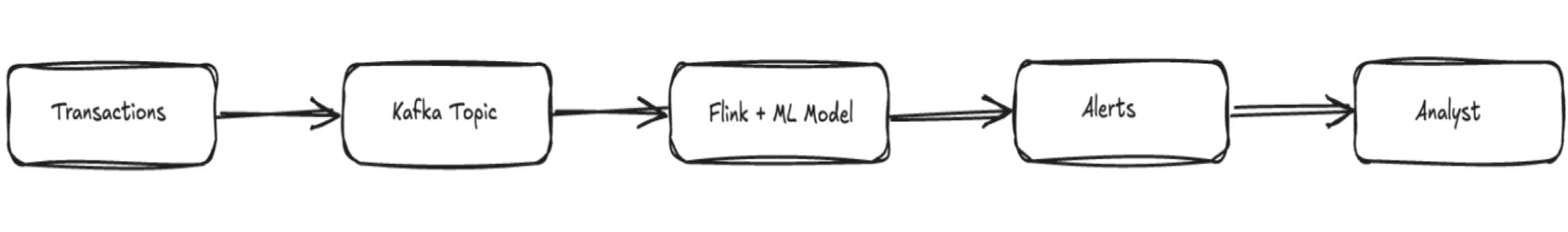

Workflow for detecting and managing fraudulent activities

Simple architecture flow:

- Transactions – Payments, withdrawals, or transfers create event data.
- Kafka – Collects and streams these events in real time.
- Flink + ML Model – Checks events for unusual or risky patterns.
- Alerts – Flags suspicious transactions immediately.
- Analyst – Reviews alerts or lets the system block them automatically.

Main parts:

- Kafka topics – Store live transaction events from ATMs, card payments, and online banking.
- Flink processor – Checks each transaction as it arrives.
- ML model – Spots patterns that may mean fraud.
- Alert service – Sends notifications or blocks suspicious transactions automatically.
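The flow above can be sketched in plain Python. This is a simplified illustration under stated assumptions: the `risk_score` rules are a stand-in for the trained ML model, the thresholds, field names, and `HOME_COUNTRY` lookup are hypothetical, and an in-memory list takes the place of a Kafka topic processed by Flink.

```python
from dataclasses import dataclass

@dataclass
class Transaction:
    account: str
    amount: float
    country: str

# Hypothetical per-account profile; a real system would use model features.
HOME_COUNTRY = {"acct-1": "US", "acct-2": "DE"}

def risk_score(tx: Transaction) -> float:
    """Stand-in for the ML model: returns a score between 0 and 1."""
    score = 0.0
    if tx.amount > 5_000:                      # sudden large withdrawal
        score += 0.6
    home = HOME_COUNTRY.get(tx.account, tx.country)
    if tx.country != home:                     # purchase from an unexpected location
        score += 0.5
    return min(score, 1.0)

def process(stream, alert_threshold=0.5, block_threshold=0.9):
    """Handle each event as it arrives and yield (decision, transaction)."""
    for tx in stream:
        score = risk_score(tx)
        if score >= block_threshold:
            yield "block", tx                  # automatic block
        elif score >= alert_threshold:
            yield "alert", tx                  # send to a fraud analyst
        else:
            yield "allow", tx

stream = [
    Transaction("acct-1", 40.0, "US"),
    Transaction("acct-1", 9_000.0, "US"),
    Transaction("acct-2", 7_500.0, "BR"),
]
decisions = [decision for decision, _ in process(stream)]
print(decisions)  # ['allow', 'alert', 'block']
```

Using two thresholds mirrors the two outcomes described in the text: a medium score goes to an analyst for review, and a high score blocks the transaction automatically.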

Learn more about Confluent in financial services

Real-Time Product Recommendations

Event-driven recommendations use live user activity to suggest products or content instantly. For example, when someone views a product, adds it to a cart, or watches a video, that action is sent to Kafka. A real-time analytics model processes these events and updates the suggestions right away. This is similar to how Netflix uses real-time streaming for recommendations to keep them fresh and relevant.

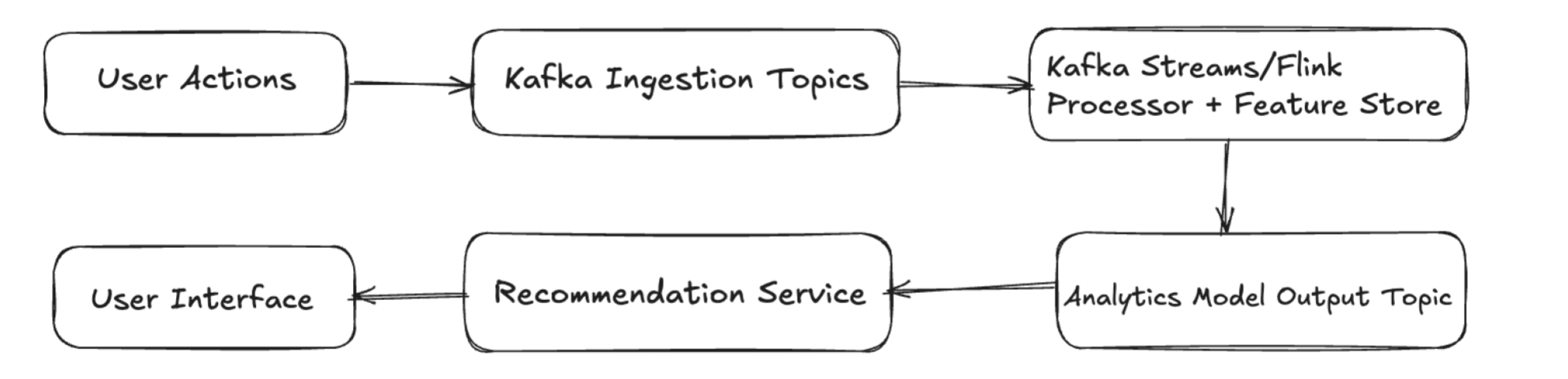

Workflow for generating real-time product recommendations

This architecture includes:

- User Actions – Browsing, clicks, searches, and purchases generate events.
- Kafka Ingestion Topics – Raw events are published to specific topics like product-views or cart-events.
- Kafka Streams/Flink Processor + Feature Store – Enriches events with profile and historical data for personalization.
- Analytics Model Output Topic – Model results are published to a Kafka topic such as recommendations.
- Recommendation Service – Reads from the output topic and formats the data for the application.
- User Interface – Displays updated recommendations to the customer in real time.

Main parts:

- Kafka – Streams events like clicks, searches, and purchases.
- Event store – Keeps recent interactions ready for quick access.
- Analytics model – Generates personalized suggestions using real-time events and data from a feature store.
- Serving layer – Microservice or API that sends updated recommendations to the app or website.
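As a rough sketch of the idea, the following plain-Python example updates a user's recommendations after every event. It makes several assumptions: the `CATALOG` dictionary stands in for a feature store, the `WEIGHTS` values and the category-based ranking are hypothetical and far simpler than a production model, and method calls take the place of Kafka topics such as product-views or cart-events.

```python
from collections import Counter, defaultdict

# Hypothetical catalog: product -> category (stands in for a feature store).
CATALOG = {"tent": "outdoor", "stove": "outdoor", "lamp": "home", "rug": "home"}

# Hypothetical event weights: a cart add signals more intent than a view.
WEIGHTS = {"view": 1, "cart": 3}

class Recommender:
    def __init__(self):
        self.interest = defaultdict(Counter)   # user -> category -> score
        self.seen = defaultdict(set)           # user -> products already touched

    def on_event(self, user, action, product):
        """Update state for one event and return fresh recommendations."""
        category = CATALOG[product]            # enrichment step
        self.interest[user][category] += WEIGHTS[action]
        self.seen[user].add(product)
        return self.recommend(user)

    def recommend(self, user):
        """List unseen products, strongest category of interest first."""
        ranked = [cat for cat, _ in self.interest[user].most_common()]
        return [product
                for cat in ranked
                for product, product_cat in CATALOG.items()
                if product_cat == cat and product not in self.seen[user]]

rec = Recommender()
print(rec.on_event("u1", "view", "lamp"))   # ['rug']
print(rec.on_event("u1", "cart", "tent"))   # ['stove', 'rug']
```

The second call shows the point of processing events as they arrive: a single cart add immediately reorders the suggestions.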

IoT Data Processing for Smart Devices

In IoT systems, smart devices like sensors, cameras, and appliances send telemetry data continuously. Real-time streaming makes it possible to process this data instantly for monitoring, analytics, and automated actions. A common setup uses edge gateways to collect device data, Kafka to transport it, and a stream processor to analyze it and trigger alerts or store it for later.

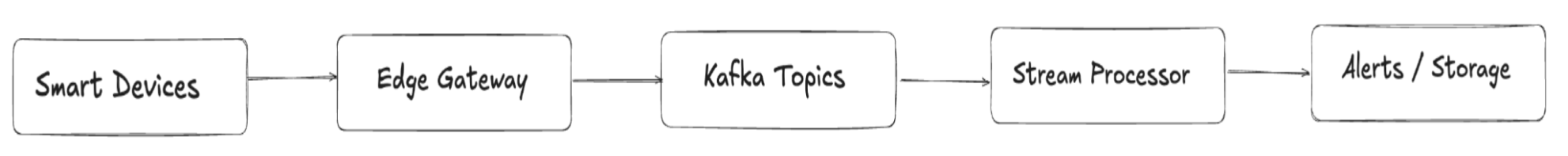

IoT data processing workflow for smart devices

This simple architecture flow features:

- Smart Devices – Sensors or connected devices generate data such as temperature, motion, or location.
- Edge Gateway – Aggregates device data and sends it to Kafka over a secure connection.
- Kafka Topics – Organize telemetry streams for different device types or locations.
- Stream Processor – Flink or Kafka Streams analyzes the incoming data in real time.
- Alerts / Storage – Sends notifications for critical conditions and stores data for historical analysis.

Main parts:

- Edge gateways – Connect local devices to the cloud or data center.
- Kafka cluster – Streams telemetry data in real time and stores it temporarily.
- Stream processing engine – Runs analytics and detection logic on incoming events.
- Alerting system – Sends immediate notifications for abnormal readings.
- Storage layer – Keeps historical telemetry for reports, trends, and compliance.

Latency target: Often under 1 second from event generation to alert delivery.
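The detection step in the stream processor might look something like the sketch below. It is plain Python rather than Flink or Kafka Streams, and the window size, temperature threshold, and device names are hypothetical values chosen for illustration.

```python
from collections import defaultdict, deque

WINDOW = 3          # hypothetical: number of recent readings to average
THRESHOLD = 80.0    # hypothetical temperature limit

# Per-device state: the most recent WINDOW readings.
recent = defaultdict(lambda: deque(maxlen=WINDOW))

def on_reading(device_id: str, temperature: float):
    """Process one telemetry reading; return an alert string or None."""
    window = recent[device_id]
    window.append(temperature)
    average = sum(window) / len(window)
    # Alert only once the window is full, to avoid reacting to a single spike.
    if len(window) == WINDOW and average > THRESHOLD:
        return f"ALERT {device_id}: avg {average:.1f} over last {WINDOW} readings"
    return None

readings = [("pump-1", 70.0), ("pump-1", 85.0), ("pump-1", 90.0), ("pump-1", 95.0)]
alerts = [a for a in (on_reading(d, t) for d, t in readings) if a]
print(alerts)
```

Averaging over a small window is one simple way to trade a little latency for fewer false alarms; a real deployment would tune this per sensor type.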

For more examples, read “Stream Processing with IoT Data: Challenges, Best Practices, and Techniques.”

Real-Time Analytics Dashboard

Real-time dashboards allow teams to monitor key metrics as they happen, instead of waiting for delayed reports. A typical setup streams events into Kafka, processes them with a stream processor, and stores results in materialized views. These views are then used by visualization tools like Grafana or Tableau to present live charts and metrics.

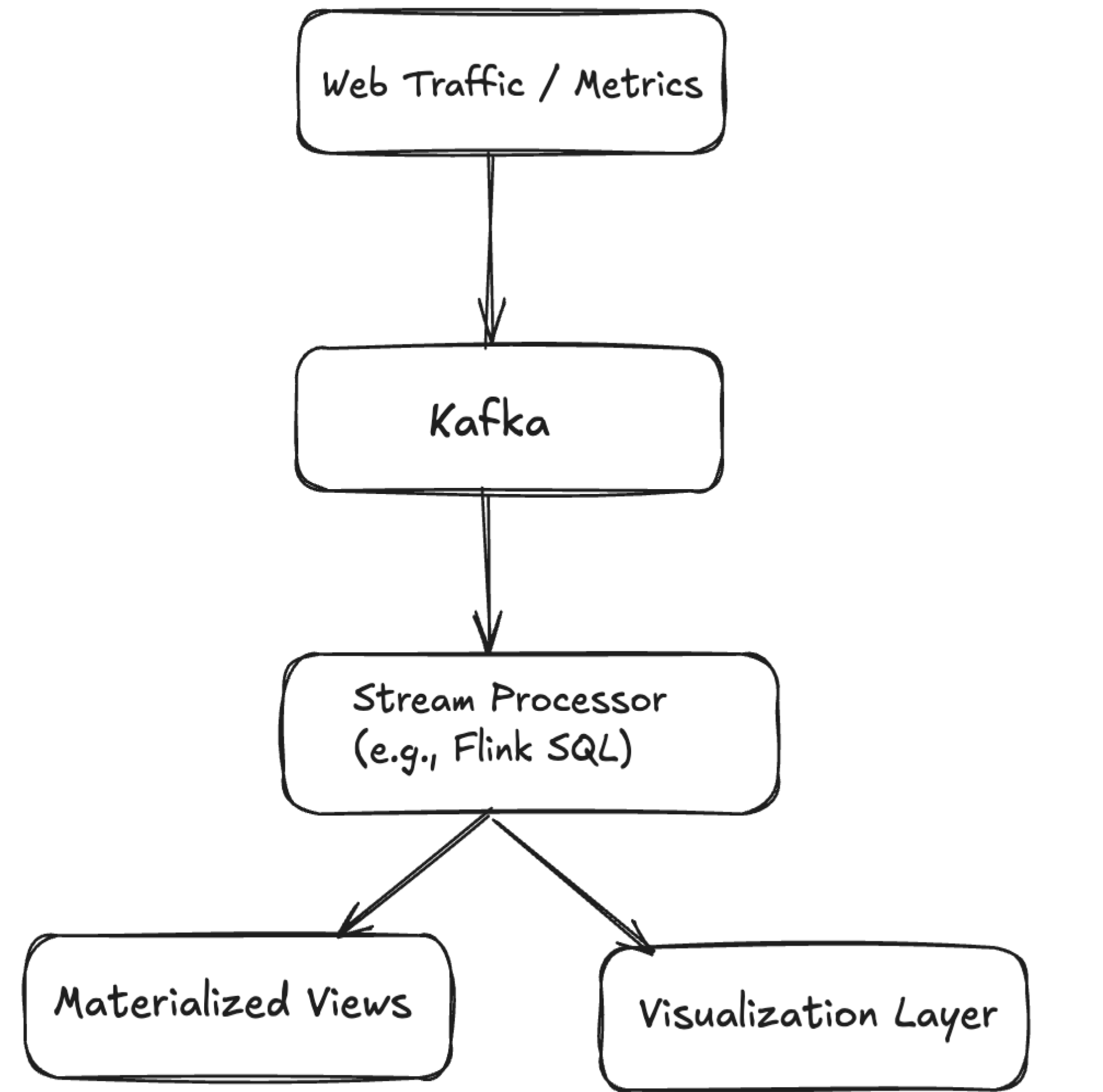

Workflow for visualization of real-time data analytics

Here's how this works:

- Event Ingestion with Kafka – All raw events (such as website clicks, orders, or sensor readings) are collected and sent into Kafka topics in real time.
- Stream Processing – A stream processor (for example, Flink or ksqlDB) reads these Kafka topics. It aggregates, filters, or enriches the data to prepare useful metrics like “orders per minute” or “active users right now.”
- Materialized Views – The processed results are stored in materialized views (special tables that keep running totals or summaries). This makes querying very fast because the heavy calculations are already done.
- Visualization Layer – Dashboards like Grafana, Tableau, or custom web apps read from these views and show charts and graphs that update continuously without manual refresh.
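The steps above can be sketched with an "orders per minute" metric. This is a minimal in-memory illustration, not Flink or ksqlDB code; the `OrdersPerMinute` class, the event fields, and the use of integer seconds for `ts` are assumptions.

```python
from collections import Counter

class OrdersPerMinute:
    """A tiny materialized view: order counts keyed by the start of each minute."""

    def __init__(self):
        self.view = Counter()

    def on_event(self, event: dict) -> None:
        # Stream processing step: filter to orders, then aggregate by window.
        if event["type"] != "order":
            return
        minute = event["ts"] // 60 * 60      # start of the 1-minute tumbling window
        self.view[minute] += 1

    def query(self, minute: int) -> int:
        # The dashboard reads a precomputed number instead of rescanning raw events.
        return self.view[minute]

events = [
    {"type": "order", "ts": 0},
    {"type": "click", "ts": 10},
    {"type": "order", "ts": 59},
    {"type": "order", "ts": 61},
]
mv = OrdersPerMinute()
for e in events:
    mv.on_event(e)
print(mv.query(0), mv.query(60))  # 2 1
```

Because the count is maintained incrementally, a dashboard query is a single lookup regardless of how many raw events have flowed through.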

Example Use Case:

- A company like Vimeo or OutSystems wants to track how many users are on their website every second. Kafka collects click events, Flink aggregates them, and the dashboard displays a live graph of active sessions.

Alternative option:

Change data capture (CDC) from databases can also be streamed into Kafka, then aggregated with Flink SQL for up-to-the-minute dashboards.
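To make the CDC option concrete, here is a small sketch of how change events can keep an aggregate current. The `before`/`after` record shape is an assumption loosely modelled on what common CDC tools emit, and a plain Python `Counter` stands in for a Flink SQL aggregation.

```python
from collections import Counter

orders_by_status = Counter()   # the aggregate a dashboard would read

def apply_change(change: dict) -> None:
    """Apply one change event (insert, update, or delete) to the aggregate."""
    before, after = change.get("before"), change.get("after")
    if before is not None:                 # update or delete: retract the old row
        orders_by_status[before["status"]] -= 1
    if after is not None:                  # insert or update: add the new row
        orders_by_status[after["status"]] += 1

changes = [
    {"before": None, "after": {"id": 1, "status": "open"}},                             # insert
    {"before": None, "after": {"id": 2, "status": "open"}},                             # insert
    {"before": {"id": 1, "status": "open"}, "after": {"id": 1, "status": "shipped"}},   # update
    {"before": {"id": 2, "status": "open"}, "after": None},                             # delete
]
for change in changes:
    apply_change(change)
print(dict(orders_by_status))  # {'open': 0, 'shipped': 1}
```

Treating an update as a retraction of the old row followed by an addition of the new one keeps the totals correct without re-reading the source table.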

Main parts:

- Kafka – Ingests continuous events such as page views, clicks, or application metrics.
- Stream processor – Flink or Kafka Streams calculates metrics and aggregations in real time.
- Materialized views – Store aggregated results for quick queries (e.g., number of users online, average response time).
- Visualization layer – Dashboards built in Grafana, Tableau, or custom web apps display the live data.

Business use cases:

- Monitoring website traffic in real time
- Tracking system performance and errors
- Live business KPIs such as orders per minute

Learn more about building real-time CDC pipelines.

Supply Chain Event Tracking

In supply chains, every movement of goods creates an event: items are packed, shipped, delayed, or delivered. Real-time streaming makes it possible to track these events instantly, providing full visibility into inventory and shipments. A common approach uses Kafka topics to represent lifecycle events such as “order created,” “package shipped,” or “delivered.” These streams are then enriched and synchronized with ERP or Order Management Systems (OMS). In other words, event sourcing + streaming = real-time supply chain visibility.

Supply chain event monitoring and tracking workflow

What does this workflow look like in the real world?

- Warehouse / Logistics Systems – Generate events whenever an order is packed, shipped, delayed, or delivered.
- Kafka Topics – Capture these lifecycle events in real time (e.g., orders, shipments, deliveries).
- Stream Processor – Flink or Kafka Streams enriches the events with related data like inventory levels, customer details, or expected delivery times.
- Enriched Views – Build materialized states that combine raw events into supply chain timelines or order histories.
- ERP / OMS / Dashboard – Updates enterprise systems and dashboards with the latest shipment and inventory status for users to monitor.
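A simplified sketch of this workflow is shown below. It keeps an append-only timeline per order and derives the current state from it. The `CUSTOMERS` lookup, the field names, and the `on_event` function are hypothetical, and dictionaries stand in for Kafka topics and the enriched view.

```python
from collections import defaultdict

# Hypothetical reference data used for enrichment.
CUSTOMERS = {"order-7": "Acme Corp"}

timelines = defaultdict(list)   # order id -> ordered list of lifecycle events
status = {}                     # order id -> latest enriched state

def on_event(order_id: str, event_type: str, ts: int) -> dict:
    """Append the event to the order's timeline and refresh its current state."""
    timelines[order_id].append((ts, event_type))
    status[order_id] = {
        "order_id": order_id,
        "customer": CUSTOMERS.get(order_id, "unknown"),   # enrichment
        "status": event_type,
        "last_update": ts,
        "events_seen": len(timelines[order_id]),
    }
    return status[order_id]     # what an ERP/OMS sync or dashboard would receive

lifecycle = [(100, "order created"), (160, "package shipped"), (900, "delivered")]
for ts, event_type in lifecycle:
    latest = on_event("order-7", event_type, ts)
print(latest["status"], latest["events_seen"])  # delivered 3
```

Keeping the full timeline alongside the latest state means downstream systems can show either the current status or the complete order history from the same events.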

Main parts:

- Kafka topics – Store and stream lifecycle events.
- Stream processor – Runs enrichment and joins with other datasets.
- Enriched views – Provide structured state for downstream systems.
- ERP / OMS sync – Keeps core business systems updated.
- Dashboard – Gives real-time visibility to operations teams.

Business use cases:

- Live shipment tracking across regions.
- Real-time inventory monitoring to avoid stockouts.
- Faster ERP and OMS updates for order status.

Comparing Streaming vs Traditional Architectures

When designing data systems, it is helpful to contrast streaming and batch architectures. Traditional approaches often rely on batch jobs and request/response models, while streaming architectures process continuous event flows for near-instant insights. The table below illustrates each aspect with an example.

| Aspect | Traditional (Batch / Request-Response) | Streaming (Event-Driven) |
| --- | --- | --- |
| Data movement | Retail sales data is collected all day and processed in a nightly job | Each online purchase is processed instantly as the customer checks out |
| ETL model | Bank processes transactions in a batch at the end of the day for reconciliation | Bank flags a suspicious transaction the moment it happens |
| Interaction model | Website analytics refreshed once per day in a dashboard | Dashboard shows number of active users in real time |
| State updates | Inventory updated every 24 hours in ERP system | Inventory updated immediately when an item is sold online |
| Use cases | Payroll processing, end-of-month financial reports, historical trend analysis | Fraud detection, ride-sharing apps tracking drivers, real-time product recommendations |

Common Streaming Design Patterns

When building real-time systems, some design patterns are used again and again. These patterns make it easier to solve common problems. Here are the most important ones:

- Change Data Capture (CDC) – This pattern streams every change in a database (new rows, updates, deletes) into Kafka. For example, when a customer updates their address, the change is sent in real time to other systems so all apps stay in sync.
- Log Compaction – With log compaction, Kafka retains at least the most recent value for each key in a topic and discards older values over time. This is useful when you only care about the current state. For example, storing the latest balance for each bank account instead of keeping every single transaction.
- Materialized Views – A materialized view is like a ready-to-use table built from a stream of events. It stores computed results so dashboards or apps can query them very quickly. For example, keeping the running total of website visitors in the last 5 minutes.
- Fan-out – With fan-out, one Kafka topic can send data to many different consumers at the same time. For example, the same stream of click events could be used for personalization, fraud detection, and analytics dashboards.
- Stream-Table Join – This pattern joins live events (the stream) with reference data that changes slowly or not at all (the table). For example, joining a stream of transactions with a table of customer profiles to check if a transaction matches the user’s country and spending limit.
- Dead Letter Queue (DLQ) – Sometimes events fail to process correctly. Instead of losing them, they are sent to a special topic called a dead letter queue. Later, developers can check these events, fix the errors, and reprocess them.
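Three of these patterns (log compaction, stream-table join, and dead letter queue) can be illustrated together in a short plain-Python sketch. It is a conceptual model only: Python lists and dictionaries stand in for Kafka topics, the record fields and function names are hypothetical, and details such as delete markers in compacted topics are left out.

```python
import json

def compact(log):
    """Log compaction: keep only the latest value for each key."""
    latest = {}
    for key, value in log:
        latest[key] = value
    return latest

# Table side of the join, built by compacting a hypothetical profile changelog.
profiles = compact([
    ("cust-1", {"country": "US"}),
    ("cust-2", {"country": "FR"}),
    ("cust-1", {"country": "CA"}),   # newer value replaces the earlier one
])

dead_letters = []   # stands in for a dead letter topic

def join_with_profile(raw: str):
    """Stream-table join: enrich one transaction with its customer profile."""
    try:
        tx = json.loads(raw)
        profile = profiles[tx["customer"]]
    except (json.JSONDecodeError, KeyError) as err:
        # DLQ: keep the failed event and the reason instead of dropping it.
        dead_letters.append({"event": raw, "error": repr(err)})
        return None
    return {**tx,
            "home_country": profile["country"],
            "matches_home": tx["country"] == profile["country"]}

stream = [
    '{"customer": "cust-1", "country": "CA", "amount": 30}',
    '{"customer": "cust-9", "country": "US", "amount": 10}',   # unknown customer
    'not json',                                                # malformed event
]
enriched = [r for r in (join_with_profile(raw) for raw in stream) if r]
print(enriched)
print(len(dead_letters))  # 2
```

The two failed events remain available in `dead_letters` with their error, so they can be inspected and replayed once the cause is fixed, while the valid event flows through unaffected.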

Ready to Build Your Own Streaming Architectures?

Real-time streaming architecture lets businesses see and use data as it happens. Instead of waiting for daily or weekly reports, companies can respond immediately to events.

With tools like Kafka and Flink, teams can detect fraud, recommend products, track devices, monitor websites, and manage supply chains quickly. Common patterns such as CDC, materialized views, fan-out, and stream-table joins make building these systems easier and more reliable.

Compared to traditional batch systems, streaming provides continuous updates and instant insights. Understanding these patterns helps teams build fast, efficient and smart real-time applications. Ready to start building your next streaming architecture?