
Apache Kafka® Security Vulnerabilities You Need to Know

Apache Kafka® can be a powerful and secure technology for modern applications. However, its built-in encryption and authorization features don’t mean Kafka applications are secure out of the box. Security in Kafka must be intentionally configured. Failing to do so can lead to significant costs in terms of time, resources, downtime, and finances. This article will cover common Kafka vulnerabilities, the associated risks, how to secure your Kafka clusters, and how you can get started.

Common Kafka Security Vulnerabilities

Securing a Kafka cluster isn't a single action but a multi-layered strategy. It involves protecting data as it moves, controlling who can access it, and securing the underlying infrastructure that manages the cluster. Understanding the core concepts and common vulnerabilities is the first step toward building a robust and resilient event-streaming platform.

To understand Kafka security, it's important to be familiar with the core security concepts below (TLS encryption, TLS/SSL authentication, and SASL) and with the most common Kafka security vulnerabilities that follow them.

Encryption in Transit

Transport Layer Security (TLS) is a cryptographic protocol that provides secure communication over a network. In Kafka, its primary role is encryption in transit.

When TLS is enabled, all data sent between your applications (clients) and the Kafka brokers is encrypted. This means if an attacker were to "sniff" the network traffic, they would only see unintelligible ciphertext, not the actual plaintext data (like user information, financial transactions, or other sensitive content).

This process relies on digital certificates. When a client connects to a broker, the broker presents its certificate. The client verifies this certificate against its own list of trusted certificates (its "truststore") to ensure it's connecting to a legitimate broker and not an imposter in a man-in-the-middle attack.

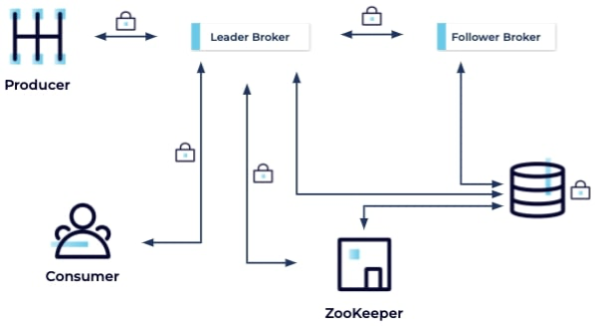

Encryption applied to messages while in transit
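As a rough illustration, the client side of this setup might look like the following Python sketch. It assumes the confluent-kafka (librdkafka) client's property names; the broker address and CA file path are hypothetical placeholders, and the two helper functions are illustrative, not part of any library.

```python
# Client-side TLS settings using confluent-kafka (librdkafka) property names.
# The broker address and CA path are hypothetical placeholders.


def tls_client_config(bootstrap_servers: str, ca_file: str) -> dict:
    """Build a client config that encrypts traffic and verifies the broker."""
    return {
        "bootstrap.servers": bootstrap_servers,
        # Wrap every client-broker connection in TLS.
        "security.protocol": "SSL",
        # CA certificate(s) the client trusts (its "truststore").
        "ssl.ca.location": ca_file,
        # Require the broker certificate to match the hostname being dialed,
        # so an impostor broker holding some other valid certificate is rejected.
        "ssl.endpoint.identification.algorithm": "https",
    }


def is_encrypted_and_verified(config: dict) -> bool:
    """True if traffic is TLS-encrypted and broker hostname checks are explicitly on."""
    protocol = config.get("security.protocol", "PLAINTEXT").upper()
    return (
        protocol in ("SSL", "SASL_SSL")
        and config.get("ssl.endpoint.identification.algorithm") == "https"
    )


if __name__ == "__main__":
    cfg = tls_client_config("broker1.example.internal:9093", "/etc/kafka/ca.pem")
    print(is_encrypted_and_verified(cfg))  # True
```

In an application, a dict like this would be passed to a client constructor such as `confluent_kafka.Producer(cfg)`.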

TLS/SSL Authentication

While encryption protects the data itself, authentication is about verifying identity. It answers the question, "Am I talking to who I think I'm talking to?" TLS can be used for both server and client authentication.

One-Way (Server) Authentication: This is the most common configuration. The client authenticates the server (as described in the handshake process above) to ensure it's connecting to the correct Kafka cluster. The server, however, does not authenticate the client. Any client that trusts the server's certificate can connect.

Two-Way (Mutual) Authentication (mTLS): For higher security, you can configure mutual TLS. In this setup, the authentication process is reciprocal:

-

The client authenticates the server's certificate.

-

The server then requests a certificate from the client.

-

The client presents its own certificate, which the server validates against its truststore.

With mTLS, the broker not only proves its identity to the client but also verifies the client's identity before allowing a connection. This ensures that only pre-approved clients (those with a valid, trusted certificate) can even attempt to connect to the cluster.

Flow of mutual TLS (mTLS) authentication between client and server

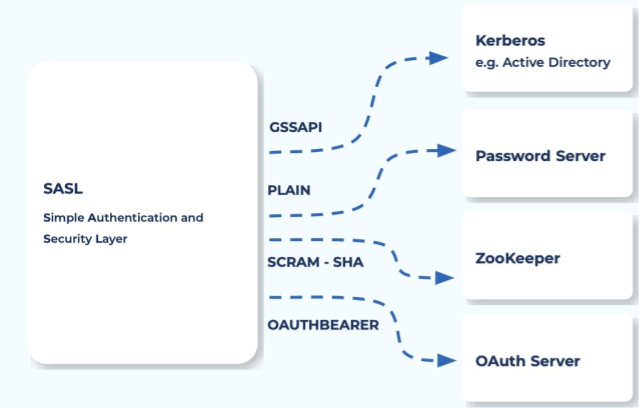
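The following is a minimal sketch of both halves of an mTLS setup. It assumes Apache Kafka's `server.properties` keys on the broker and confluent-kafka (librdkafka) keys on the client. All hostnames and file paths are hypothetical, and keystore and key passwords are omitted for brevity.

```python
# Both halves of mutual TLS. Broker keys follow Apache Kafka's server.properties;
# client keys follow confluent-kafka (librdkafka). Paths and hosts are hypothetical,
# and keystore/key passwords are omitted for brevity.

BROKER_MTLS_PROPERTIES = {
    "listeners": "SSL://0.0.0.0:9093",
    "ssl.keystore.location": "/etc/kafka/broker.keystore.jks",      # broker's own certificate and key
    "ssl.truststore.location": "/etc/kafka/broker.truststore.jks",  # CAs allowed to sign client certificates
    # The setting that turns one-way TLS into mTLS: the broker demands a client certificate.
    "ssl.client.auth": "required",
}


def mtls_client_config(bootstrap_servers: str, ca_file: str, cert_file: str, key_file: str) -> dict:
    """Client config that verifies the broker and presents its own certificate."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SSL",
        "ssl.ca.location": ca_file,             # step 1: verify the broker's certificate
        "ssl.certificate.location": cert_file,  # step 3: certificate presented to the broker
        "ssl.key.location": key_file,           # private key matching that certificate
    }


def to_properties(props: dict) -> str:
    """Render a dict as Java .properties text, one key=value pair per line."""
    return "\n".join(f"{key}={value}" for key, value in props.items())


def broker_requires_client_certs(props: dict) -> bool:
    # Kafka's default for ssl.client.auth is "none" (one-way TLS).
    return props.get("ssl.client.auth", "none") == "required"


if __name__ == "__main__":
    print(to_properties(BROKER_MTLS_PROPERTIES))
```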

SASL (Simple Authentication and Security Layer)

SASL is a framework that decouples authentication mechanisms from application protocols. In Kafka, its purpose is to verify the identity of a user or application connecting to the cluster. While TLS authenticates the machine (the client or server), SASL authenticates the principal (the user or service account) running on that machine. TLS/mTLS is like the security guard at the building entrance checking your government-issued ID. SASL is like the receptionist inside asking for your name and appointment details to verify who you are and why you're there.

Different types of SASL implementation

Kafka supports several SASL mechanisms, each suited for different use cases:

-

SASL/PLAIN: A simple username and password mechanism. It's easy to set up but sends credentials in plaintext. It must always be used with TLS encryption to prevent passwords from being exposed on the network.

-

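SASL/SCRAM (Salted Challenge Response Authentication Mechanism): A more secure username/password method. It uses a challenge-response handshake, so the password itself is never sent over the wire. Apache Kafka's documentation still recommends using SCRAM only with TLS encryption, because an intercepted SCRAM exchange can be attacked offline (for example, by dictionary or brute-force guessing), and the messages themselves would otherwise travel unencrypted.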

-

SASL/GSSAPI (Kerberos): A highly secure, ticket-based authentication protocol often used in large corporate environments. It allows for single sign-on (SSO) and is considered a gold standard for enterprise security.

-

SASL/OAUTHBEARER: Uses OAuth 2.0 access tokens for authentication. This is ideal for modern, cloud-native environments and microservices where applications are often authenticated using short-lived security tokens.
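For example, a client using SASL/SCRAM over TLS might be configured as shown below. This sketch assumes confluent-kafka (librdkafka) property names and reads credentials from hypothetical `KAFKA_USERNAME` and `KAFKA_PASSWORD` environment variables instead of hardcoding them.

```python
import os

# A SASL/SCRAM client running inside TLS, using confluent-kafka (librdkafka)
# property names. KAFKA_USERNAME / KAFKA_PASSWORD are hypothetical variable names.


def scram_client_config(bootstrap_servers: str, ca_file: str) -> dict:
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL authentication over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "ssl.ca.location": ca_file,
        "sasl.mechanism": "SCRAM-SHA-512",
        # Credentials come from the environment, never from source code.
        "sasl.username": os.environ["KAFKA_USERNAME"],
        "sasl.password": os.environ["KAFKA_PASSWORD"],
    }


def sasl_runs_over_tls(config: dict) -> bool:
    """True only if a SASL mechanism is set and the connection is TLS-encrypted."""
    return bool(config.get("sasl.mechanism")) and (
        config.get("security.protocol", "").upper() == "SASL_SSL"
    )
```

The same `sasl_runs_over_tls` check would reject, for instance, a SASL/PLAIN client configured with `SASL_PLAINTEXT`, the combination that exposes passwords on the network.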

No Authentication Enabled

This is the most basic and critical vulnerability. It means the Kafka cluster is operating in an "open door" mode, where it does not verify the identity of connecting clients.

Any application or user that can reach the Kafka brokers over the network can connect without providing any credentials. The cluster essentially trusts everyone implicitly.

This creates a free-for-all environment.

-

Unauthorized Data Access: Malicious actors can connect and read (consume) data from any topic, leading to data theft and privacy breaches.

-

Data Corruption/Injection: Attackers can write (produce) malicious or nonsensical data to topics, corrupting datasets, triggering incorrect business logic, and poisoning analytics.

-

Denial of Service (DoS): An attacker can easily overwhelm the cluster by creating thousands of topics or partitions, or by flooding the brokers with traffic, causing a service outage for legitimate applications.

It’s like leaving your office building completely unlocked. Anyone can walk in, read confidential documents, shred files, or just cause chaos.
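One way to spot this condition is to check which broker listeners authenticate clients at all. The sketch below assumes broker settings have already been loaded into a Python dict keyed by `server.properties` names. It ignores per-listener overrides (settings prefixed with `listener.name.`), so treat it as a first-pass check rather than a complete audit.

```python
# Which listener security protocols authenticate clients: PLAINTEXT never does,
# SSL only when the broker requires client certificates (mTLS).
SASL_PROTOCOLS = {"SASL_PLAINTEXT", "SASL_SSL"}


def listener_protocols(props: dict) -> dict:
    """Map each listener name in `listeners` to its security protocol."""
    custom = {}
    for pair in filter(None, props.get("listener.security.protocol.map", "").split(",")):
        name, protocol = pair.split(":")
        custom[name.strip().upper()] = protocol.strip().upper()
    result = {}
    for listener in filter(None, props.get("listeners", "").split(",")):
        name = listener.split("://")[0].strip().upper()
        # Standard listener names (PLAINTEXT, SSL, ...) map to the protocol of the same name.
        result[name] = custom.get(name, name)
    return result


def unauthenticated_listeners(props: dict) -> list:
    """Listener names on which clients can connect without proving who they are."""
    client_certs_required = props.get("ssl.client.auth", "none") == "required"
    return sorted(
        name
        for name, protocol in listener_protocols(props).items()
        if protocol not in SASL_PROTOCOLS and not (protocol == "SSL" and client_certs_required)
    )
```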

Unencrypted Data in Transit (No TLS)

When TLS is not configured, all data exchanged between clients (producers/consumers) and Kafka brokers travels across the network as plaintext.

The data is sent "in the clear," exactly as it is, without any form of encryption.

-

Eavesdropping ("Sniffing"): Anyone on the network path between the client and the broker can use network monitoring tools (like Wireshark) to capture and read the traffic. This is extremely dangerous for sensitive data like personal user information (PII), financial transactions, or proprietary business data.

-

Man-in-the-Middle (MITM) Attacks: An attacker can go beyond just listening. They can intercept the communication, alter the data in transit, and then forward it to the intended recipient. For example, they could change the amount in a financial transaction message from $100 to $10,000 without either the producer or consumer knowing.

This is the equivalent of sending sensitive information on a postcard. The mail carrier, and anyone else who handles it, can read the entire message.
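A similar first-pass check, under the same assumption (broker settings in a dict keyed by `server.properties` names), can flag every path where data travels unencrypted. This includes traffic between brokers, which uses `security.inter.broker.protocol` (default `PLAINTEXT`) unless `inter.broker.listener.name` points at a specific listener.

```python
ENCRYPTED = {"SSL", "SASL_SSL"}


def unencrypted_paths(props: dict) -> list:
    """List the listeners, and inter-broker traffic, that would send data in plaintext."""
    protocol_map = {
        name.strip().upper(): protocol.strip().upper()
        for name, protocol in (
            pair.split(":")
            for pair in props.get("listener.security.protocol.map", "").split(",")
            if pair
        )
    }
    findings = []
    for listener in filter(None, props.get("listeners", "").split(",")):
        name = listener.split("://")[0].strip().upper()
        if protocol_map.get(name, name) not in ENCRYPTED:
            findings.append(f"listener {name}")
    # If inter.broker.listener.name is set, that listener was already checked above;
    # otherwise brokers talk to each other using security.inter.broker.protocol,
    # which defaults to PLAINTEXT.
    if "inter.broker.listener.name" not in props:
        if props.get("security.inter.broker.protocol", "PLAINTEXT").upper() not in ENCRYPTED:
            findings.append("inter-broker traffic")
    return findings
```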

Weak or Misconfigured Access Controls

Poorly defined ACLs can give users excessive permissions, increasing the risk of accidental or malicious data modification or deletion. In this situation, authenticated users are granted far more permissions than their roles require, often through the overuse of wildcards (*) or by assigning admin-level privileges to regular applications.

-

Privilege Escalation: An attacker who compromises a low-level application can leverage its excessive permissions to read sensitive data or alter critical topics.

-

Accidental Damage: A well-intentioned developer or a buggy application with broad DELETE or ALTER permissions could accidentally delete critical topics or change configurations, leading to data loss or outages.

-

Insider Threats: A malicious employee can exploit their overly generous permissions to access or exfiltrate data they shouldn't be able to see.

It's like giving every employee a master key that opens every door in the company building, including the server room and the CEO's office, when they only need access to their own workspace.
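A least-privilege review can start by flagging the broadest grants. The `AclRule` record below is a hypothetical, simplified stand-in for a Kafka ACL (for example, one parsed from the output of `kafka-acls.sh --list`). It is not a class from any Kafka library.

```python
from dataclasses import dataclass

# Hypothetical, simplified ACL record (not a class from any Kafka client library).


@dataclass(frozen=True)
class AclRule:
    principal: str      # e.g. "User:orders-service", or "User:*" for everyone
    resource_type: str  # e.g. "Topic", "Group", "Cluster"
    resource_name: str  # a literal name, or "*" for every resource of that type
    operation: str      # e.g. "Read", "Write", "Delete", or "All"


def overly_broad(rules):
    """Return (rule, reasons) pairs for grants that violate least privilege."""
    findings = []
    for rule in rules:
        reasons = []
        if rule.principal == "User:*":
            reasons.append("applies to every user")
        if rule.resource_name == "*":
            reasons.append("applies to every resource of this type")
        if rule.operation == "All":
            reasons.append("grants every operation, including Delete and Alter")
        if reasons:
            findings.append((rule, reasons))
    return findings
```

A grant such as `AclRule("User:orders-service", "Topic", "orders", "Read")` passes. `AclRule("User:*", "Topic", "*", "All")` is reported with all three reasons, which is the "master key" case described above.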

Insecure ZooKeeper Configuration

The control plane (managed by ZooKeeper in older versions or internal KRaft controllers in modern Kafka) stores the cluster's most critical metadata—broker IPs, topic configurations, ACLs, etc. If it's not secured, the entire cluster is at risk.

The ports for ZooKeeper or the KRaft controllers are exposed, and there's no authentication or encryption required to connect to them.

-

Full Cluster Takeover: An attacker with access to an unsecured control plane can essentially become the cluster administrator. They can modify ACLs to grant themselves full access, delete topics, reassign partitions, and bring the entire Kafka cluster down.

-

Metadata Leakage: The configuration data provides a complete map of the cluster, which an attacker can use to plan a more sophisticated attack.

This is like leaving the blueprints, security system codes, and master keys for a bank vault in an unlocked box on the sidewalk.

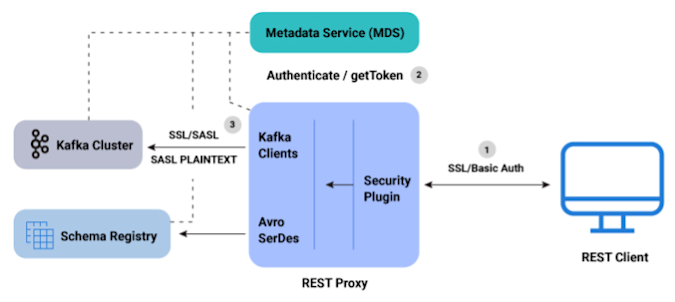
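The sketch below checks broker settings for an exposed control plane, again assuming a dict keyed by `server.properties` names. In ZooKeeper mode, it looks for the broker's TLS-to-ZooKeeper and znode ACL settings. In KRaft mode, it checks that each controller listener maps to an encrypted protocol. It does not inspect ZooKeeper's own server configuration or network exposure.

```python
# Settings a ZooKeeper-mode broker needs for TLS to ZooKeeper and for creating
# ACL-protected znodes (zookeeper.set.acl only helps if the broker also
# authenticates to ZooKeeper, via SASL or mTLS).
ZK_CLIENT_HARDENING = {
    "zookeeper.ssl.client.enable": "true",
    "zookeeper.clientCnxnSocket": "org.apache.zookeeper.ClientCnxnSocketNetty",
    "zookeeper.set.acl": "true",
}


def control_plane_issues(props: dict) -> list:
    issues = []
    if "zookeeper.connect" in props:  # broker runs in ZooKeeper mode
        for key, expected in ZK_CLIENT_HARDENING.items():
            if props.get(key, "").lower() != expected.lower():
                issues.append(f"{key} should be {expected}")
    protocol_map = {
        name.strip().upper(): protocol.strip().upper()
        for name, protocol in (
            pair.split(":")
            for pair in props.get("listener.security.protocol.map", "").split(",")
            if pair
        )
    }
    # KRaft mode: the controller listener carries all cluster metadata.
    for name in filter(None, props.get("controller.listener.names", "").split(",")):
        protocol = protocol_map.get(name.strip().upper(), "unmapped")
        if protocol not in ("SSL", "SASL_SSL"):
            issues.append(f"controller listener {name.strip()} uses {protocol}")
    return issues
```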

Open REST Interfaces

Components like the Kafka REST Proxy, Kafka Connect, and ksqlDB provide convenient HTTP/REST APIs for interacting with Kafka. If these web-facing interfaces aren't secured, they become a major entry point for attackers. Learn more about the Kafka REST Proxy.
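As one example, a Kafka Connect worker's REST API can be checked for HTTPS and client authentication. This sketch assumes the worker settings are in a dict and that the REST API serves plain `http://:8083` when `listeners` is unset. It treats either `listeners.https.ssl.client.auth=required` or a configured `rest.extension.classes` (such as a basic-auth extension) as evidence of authentication. REST Proxy and ksqlDB use their own settings and are not covered.

```python
def connect_rest_issues(worker_props: dict) -> list:
    """Flag a Kafka Connect worker REST API that is unencrypted or unauthenticated."""
    issues = []
    # Assumption: with no `listeners` set, the REST API serves plain HTTP on port 8083.
    listeners = worker_props.get("listeners", "http://:8083")
    for url in filter(None, (part.strip() for part in listeners.split(","))):
        if not url.lower().startswith("https://"):
            issues.append(f"REST listener {url} is not HTTPS")
    mtls = worker_props.get("listeners.https.ssl.client.auth", "none") == "required"
    # A REST extension (for example, a basic-auth extension) is another way to authenticate callers.
    if not mtls and not worker_props.get("rest.extension.classes"):
        issues.append("REST API accepts unauthenticated requests")
    return issues
```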

Audit Logging Not Enabled

Audit logs are the authoritative record of security-relevant events occurring within the cluster, such as authentication successes and failures and authorized or denied actions. When audit logging is not enabled, the Kafka brokers do not record who is attempting to do what, and when. It’s like having a high-tech security system with alarms and locks but disabling the surveillance cameras and event logs. When a break-in happens, you know you've been compromised, but you have no way of knowing how or by whom.

To learn how to prevent these vulnerabilities, you can explore courses on Kafka authorization.

Improper Key/Truststore Management

Keys are secret pieces of information (like a password) used to encrypt and decrypt data, while a truststore is a secure repository of certificates from trusted parties (Certificate Authorities) used to verify identities.

Improper management includes practices like:

-

Hardcoding keys or credentials directly into application source code.

-

Using weak or default keys that are easily guessable.

-

Failing to rotate keys regularly.

-

Granting excessive permissions to keys.

The primary risk is unauthorized access. If an attacker gains access to these keys, they can decrypt sensitive data, impersonate legitimate users or services, and compromise the entire system's integrity. It's like leaving the master key to your entire building under the doormat.
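Two of these problems, overly permissive access to key files and missed rotation, can be checked mechanically. This is a POSIX-only sketch. The 90-day rotation window is an assumed policy, not a Kafka default, and the certificate issue date is passed in by the caller rather than parsed from the keystore.

```python
import os
import stat
import tempfile
from datetime import datetime, timedelta, timezone

ROTATION_WINDOW = timedelta(days=90)  # assumed policy, not a Kafka default


def key_file_issues(path: str, issued_at: datetime, now=None) -> list:
    """Check a keystore or private-key file for loose permissions and overdue rotation."""
    issues = []
    mode = os.stat(path).st_mode
    # Any group or other permission bit means more than the owning service can reach the file.
    if mode & (stat.S_IRWXG | stat.S_IRWXO):
        issues.append("accessible by group/others")
    now = now or datetime.now(timezone.utc)
    if now - issued_at > ROTATION_WINDOW:
        issues.append(f"older than {ROTATION_WINDOW.days} days; rotate it")
    return issues


if __name__ == "__main__":
    fd, demo_path = tempfile.mkstemp()  # mkstemp creates the file with owner-only permissions
    os.close(fd)
    print(key_file_issues(demo_path, datetime.now(timezone.utc)))
    os.remove(demo_path)
```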

Exposure to Replay Attacks

A replay attack is a type of network attack in which a malicious actor intercepts a valid data transmission (like a login request) and fraudulently re-transmits it to impersonate the original sender, gain unauthorized access, or cause a duplicate operation.

Imagine someone records the sound of you unlocking a voice-activated door and then simply plays back the recording to open it themselves. That's essentially a replay attack. The system is fooled because the replayed message is technically valid and authentic; it just wasn't sent by the legitimate user at that time.

This is particularly dangerous for financial transactions or authentication processes. To prevent this, systems use countermeasures like:

-

Nonces (Numbers used once): A unique, random number included in each message that the server tracks, making it impossible to reuse an old message.

-

Timestamps: Messages are only valid for a very short period.

-

Session Tokens: A unique token is generated for each session and invalidated upon logout.
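The nonce and timestamp countermeasures can be combined as in the following sketch. It is a generic illustration of the technique using Python's standard library (HMAC-SHA256 for integrity), not a Kafka feature. The 30-second freshness window and the signed-message layout are assumptions.

```python
import hashlib
import hmac
import secrets
import time


class ReplayGuard:
    """Accept each signed message once, and only while it is fresh."""

    def __init__(self, key: bytes, max_age_seconds: float = 30.0):
        self._key = key
        self._max_age = max_age_seconds
        self._seen = {}  # nonce -> message timestamp, kept only for the freshness window

    def _mac(self, payload: bytes, nonce: str, ts: float) -> str:
        message = nonce.encode() + b"|" + repr(ts).encode() + b"|" + payload
        return hmac.new(self._key, message, hashlib.sha256).hexdigest()

    def sign(self, payload: bytes, now=None) -> dict:
        ts = time.time() if now is None else now
        nonce = secrets.token_hex(16)  # random number used once
        return {"payload": payload, "nonce": nonce, "ts": ts, "mac": self._mac(payload, nonce, ts)}

    def verify(self, msg: dict, now=None) -> bool:
        now = time.time() if now is None else now
        # Integrity: the nonce and timestamp are covered by the MAC, so they cannot be swapped.
        if not hmac.compare_digest(self._mac(msg["payload"], msg["nonce"], msg["ts"]), msg["mac"]):
            return False
        # Timestamp: reject stale (or far-future) messages.
        if abs(now - msg["ts"]) > self._max_age:
            return False
        # Forget nonces whose messages are too old to pass the timestamp check anyway.
        self._seen = {n: t for n, t in self._seen.items() if now - t <= self._max_age}
        # Nonce: reject anything already seen.
        if msg["nonce"] in self._seen:
            return False
        self._seen[msg["nonce"]] = msg["ts"]
        return True
```

Replaying a captured message is rejected by the nonce check while the message is still fresh, and by the timestamp check afterward. This is why the guard only needs to remember nonces for the length of the freshness window.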

Lack of Network Segmentation

A lack of network segmentation refers to a "flat" network architecture where all devices (computers, servers, printers) are connected in one large network with no internal divisions. This means once an attacker gains a foothold anywhere on the network, they can easily move laterally to access and compromise other critical systems.

Think of a submarine with no separate, watertight compartments. A single leak would flood the entire vessel. Network segmentation, on the other hand, divides the network into smaller, isolated sub-networks (segments) using firewalls and Virtual LANs (VLANs). If one segment is breached, the damage is contained, preventing the attacker from reaching sensitive areas like a database of customer information or financial records.

This strategy is a core principle of a Zero Trust security model, where no device is trusted by default, and access is strictly controlled between segments. It significantly reduces the potential impact of a security breach.
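Segmentation itself is enforced with firewalls, VLANs, and VPC routing. A simple configuration check can still catch brokers that advertise publicly routable addresses. This sketch takes a broker's `advertised.listeners` value as a string and only checks IP literals. Hostnames are skipped and would need to be resolved and checked separately.

```python
import ipaddress


def publicly_routable_hosts(advertised_listeners: str) -> list:
    """Return advertised IP addresses that are reachable from the public internet."""
    exposed = []
    for entry in filter(None, (part.strip() for part in advertised_listeners.split(","))):
        host_port = entry.split("://", 1)[-1]           # drop "NAME://"
        host = host_port.rsplit(":", 1)[0].strip("[]")  # drop ":port" and IPv6 brackets
        try:
            address = ipaddress.ip_address(host)
        except ValueError:
            continue  # a hostname (or empty host): resolve and check it separately
        if address.is_global:
            exposed.append(host)
    return exposed
```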

Why Kafka Security Vulnerabilities Matter

For use cases like fraud detection, SIEM optimization, and event-driven microservices, any security breach can have devastating consequences, such as compliance risk, data leaks, downtime, and unauthorized access.

The types of data likely to be exposed via Kafka's most common security vulnerabilities include personally identifiable information (PII), financial records, and other sensitive customer data. This is particularly relevant for data pipelines involving AI systems and large language models (LLMs), as they often rely on large datasets of customer information. A managed service like Confluent Cloud can help mitigate these risks by providing robust, built-in security controls. You can learn more about this in our post on Confluent Cloud security controls.

How to Secure Kafka

Securing an Apache Kafka cluster is crucial for protecting your data streams. This section breaks down the most common security vulnerabilities you're likely to encounter in a Kafka deployment. For each vulnerability, we'll outline the core mitigation strategy you can implement in open-source Kafka and compare it with the enhanced or simplified security features offered by the Confluent Platform. This will help you understand the essential steps to harden your Kafka environment and see how Confluent can streamline that process.

| Common Kafka Vulnerability | Core Mitigation | Kafka vs. Confluent |
| --- | --- | --- |
| No Authentication Enabled | Use SASL Authentication (SCRAM, Kerberos, OAuth) | Confluent provides simplified and enhanced authentication mechanisms. |
| Unencrypted Data in Transit | Enable TLS for Encryption | Confluent Cloud enables TLS by default. |
| Weak or Misconfigured Access Controls | Apply RBAC or Fine-Grained ACLs | Confluent offers Role-Based Access Control (RBAC) for more granular permissions. |
| Insecure ZooKeeper Configuration | Harden ZooKeeper with SASL and TLS | Confluent supports KRaft, Kafka's self-managed metadata layer, which eliminates the need for ZooKeeper. |
| Open REST Interfaces | Secure Kafka Connect and REST Proxy | Confluent provides secure REST interfaces with built-in authentication and authorization. |
| Audit Logging Not Enabled | Enable Audit Logging | Confluent offers comprehensive audit logs for enhanced security monitoring. |
| Improper Key/Truststore Management | Rotate and Manage Secrets Properly | Confluent Cloud manages secrets and keys for you. |
| Lack of Network Segmentation | Use Private Networking (VPC Peering, PrivateLink) | Confluent Cloud supports private networking to isolate your Kafka clusters. |

For a deeper dive into securing Kafka, you can explore courses on Kafka authentication.

Kafka Security Checklist

Building secure, enterprise-grade data streaming pipelines with Apache Kafka requires a multi-layered security strategy. The following checklist outlines essential best practices to implement from day one. Following these steps will help you protect your data, control access, and maintain a strong security posture for your Kafka clusters.

-

TLS enabled for all brokers and clients: Encrypting data in transit with TLS prevents eavesdropping and man-in-the-middle attacks. This should be considered a non-negotiable baseline for any production environment.

-

Authentication protocols in place (SASL/OAuth): Ensure that every client, user, and broker connecting to your cluster is properly authenticated. This verifies their identity before allowing any interaction.

-

ACLs configured for all users/topics: Use access control lists (ACLs) to enforce the principle of least privilege. Each client should only have the specific permissions (Read, Write, Create) they need for the topics they must access.

-

Connect and REST interfaces secured: Components like Kafka Connect and the REST Proxy are powerful entry points into your cluster. They must be secured using the same principles of encryption, authentication, and authorization.

-

Audit logs enabled: Maintain a detailed record of who is accessing your cluster, what operations they are performing, and whether those requests succeed or fail. Audit logs are critical for security monitoring and incident response.

-

ZooKeeper hardened (if applicable): For clusters still using ZooKeeper, it's vital to secure it by enabling authentication and encryption. Note that modern Kafka versions use a self-managed metadata quorum (KRaft mode) instead, and Apache Kafka 4.0 removed ZooKeeper support entirely.

-

No public-facing brokers: Brokers should never be exposed directly to the public internet. Doing so creates a massive attack surface. Always place your cluster within a private network.

-

Private networking used: Isolate your Kafka cluster from public networks using technologies like VPC Peering or AWS PrivateLink. This ensures that traffic to and from your brokers is never routed over the open internet.

Securing Your Kafka Deployment With Confluent

Before diving into Confluent's enhancements, it's important to understand the security foundation provided by open-source Apache Kafka. Securing a Kafka cluster fundamentally involves configuring three key areas:

-

Encryption (TLS): Encrypting data in transit to prevent eavesdropping and data tampering as it moves between clients and brokers.

-

Authentication (SASL/mTLS): Verifying the identity of clients and brokers to ensure only legitimate applications and servers can connect to each other.

-

Authorization (ACLs): Defining what an authenticated user is allowed to do (e.g., read from Topic A, write to Topic B).

Setting these up correctly is the essential first step. However, managing these configurations, especially at scale, can be complex and error-prone. This is where a platform like Confluent provides significant value.

How Confluent Improves Kafka Security

Confluent, founded by the original creators of Kafka, provides a data streaming platform built around Kafka that is hardened and enhanced for enterprise use. Its deep expertise, backed by over 5 million engineering and support hours, is reflected in a platform designed to meet the strict security and compliance needs of more than 5,000 customers, many in highly regulated industries like finance and healthcare.

Here’s how Confluent improves upon standard Kafka security:

Role-Based Access Control (RBAC)

While Kafka's Access Control Lists (ACLs) are powerful, they can become incredibly complex to manage in a large organization. You might need to define hundreds or thousands of granular rules for individual users and topics, a condition known as "ACL sprawl."

RBAC is a more intuitive and scalable approach to authorization. Instead of assigning permissions one-by-one, you define roles (e.g., Developer, DataAnalyst, SecurityAdmin) and assign a set of permissions to each role. You then simply assign users or applications to a role.

This is far easier to manage. To grant a new analyst access, you just assign them the DataAnalyst role. To revoke access, you remove them from the role. This model drastically reduces administrative overhead and the risk of misconfiguration compared to managing individual ACLs.
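The role model described above can be sketched in a few lines. The roles and permissions here are hypothetical and are based on the examples in this section. This is not Confluent's RBAC API, and these are not its built-in role names.

```python
# Hypothetical roles from the examples above: role -> set of (operation, resource).
ROLES = {
    "DataAnalyst": {("Read", "Topic:analytics"), ("Read", "Group:analytics-dashboards")},
    "Developer": {("Read", "Topic:orders"), ("Write", "Topic:orders")},
}

# principal -> set of role names assigned to it
role_bindings: dict = {}


def grant(principal: str, role: str) -> None:
    role_bindings.setdefault(principal, set()).add(role)


def revoke(principal: str, role: str) -> None:
    role_bindings.get(principal, set()).discard(role)


def is_allowed(principal: str, operation: str, resource: str) -> bool:
    """A request is allowed if any role bound to the principal includes it."""
    return any((operation, resource) in ROLES[role] for role in role_bindings.get(principal, ()))
```

Onboarding or offboarding an analyst is a single `grant` or `revoke` call, while the per-resource permissions live in one place (`ROLES`) instead of being repeated for every user.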

Comprehensive Audit Logs

Detecting and investigating security incidents requires a detailed record of activity. While you can configure some logging in open-source Kafka, Confluent provides a comprehensive, out-of-the-box audit logging system designed for security and compliance.

Confluent's audit logs capture a detailed, immutable trail of security-critical events across the platform.

These logs are not just simple server logs; they are structured security events that answer key questions:

-

Who performed the action (the user or principal)?

-

What operation was attempted (e.g., produce, consume, create_topic, update_role)?

-

When did the event occur?

-

Was the attempt successful (Allowed) or unsuccessful (Denied)?

This level of detail is crucial for security forensics, identifying anomalous behavior (like repeated failed login attempts), and proving compliance with standards and regulations like SOC 2, HIPAA, or PCI DSS.
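To illustrate the repeated-failed-attempts case, the sketch below counts denied events per principal. The `AuditEvent` record is a hypothetical, simplified shape built from the fields listed above. It is not Confluent's actual audit log schema, and the threshold of 5 is arbitrary.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    principal: str       # who performed the action
    operation: str       # what was attempted, e.g. "produce" or "create_topic"
    timestamp: datetime  # when it happened
    allowed: bool        # True for Allowed, False for Denied


def repeated_denials(events, threshold: int = 5) -> dict:
    """Principals with at least `threshold` denied attempts in the given events."""
    denied = Counter(event.principal for event in events if not event.allowed)
    return {principal: count for principal, count in denied.items() if count >= threshold}
```

In practice, the events would be restricted to a time window (for example, the last few minutes) before counting, so that old denials do not accumulate indefinitely.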

Managed Security and Holistic Protection in the Cloud

Confluent Cloud, as a fully managed service, removes a significant portion of the security burden from your team. Security isn't just an add-on; it's built into the fabric of the platform.

-

Built-in Encryption: All data in Confluent Cloud is encrypted in transit with TLS by default. Data is also encrypted at rest, protecting it on the disk. This is a baseline security measure that you would otherwise have to configure and manage yourself.

-

Secure Networking: Confluent Cloud allows you to connect your private network environments (VPCs) directly and securely to the service using technologies like AWS PrivateLink or VPC Peering, ensuring your data never traverses the public internet.

-

Secrets Management: The platform provides secure storage for sensitive information like API keys and passwords, integrating with external secrets management tools.

-

Compliance and Certifications: Confluent Cloud maintains a suite of industry-standard security certifications and attestations (e.g., SOC 2, ISO 27001, PCI DSS, HIPAA), which means the platform's security controls have been independently audited and verified. This saves organizations a tremendous amount of time and effort in their own compliance audits.

In summary, Confluent takes the robust security primitives of Apache Kafka and builds an enterprise-grade security ecosystem around them, simplifying management, enhancing visibility with audit logs, and providing a holistic, secure platform that is ready for the most demanding enterprise workloads.

Confluent Cloud provides built-in security features like Role-Based Access Control (RBAC), which you can learn more about in the Confluent Cloud RBAC Overview. It also offers comprehensive Confluent audit logs and a detailed Confluent Cloud security overview.

Kafka Security FAQs

Is Apache Kafka secure by default?

No, Apache Kafka is not secure by default. You need to configure security features like encryption and authentication intentionally.

How do I encrypt Kafka traffic?

You can encrypt Kafka traffic by enabling TLS for all communication: client-to-broker, broker-to-broker, and traffic to the control plane (KRaft controllers or ZooKeeper).

What are the most common Kafka misconfigurations?

The most common misconfigurations include disabled authentication, unencrypted data in transit, and weak access controls.

Can Kafka be made HIPAA or GDPR compliant?

Yes, with the right security configurations, such as encryption, access controls, and audit logging, Kafka can be made compliant with regulations like HIPAA and GDPR.

How do I secure ZooKeeper in a Kafka deployment?

You can secure ZooKeeper by enabling SASL authentication and TLS encryption for all communication. However, newer versions of Kafka replace ZooKeeper with KRaft, and since Apache Kafka 4.0, KRaft is the only supported mode.