
The PipelineDB Team Joins Confluent

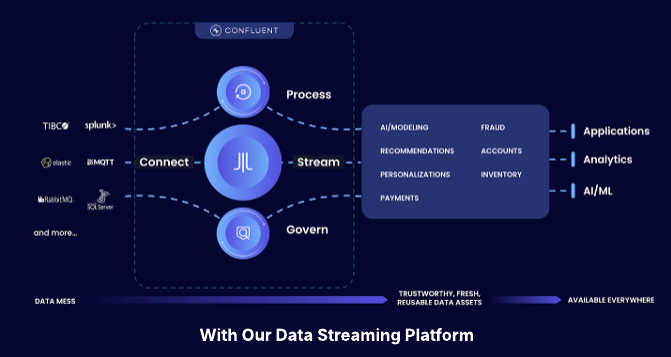

Some years ago, when I was at LinkedIn, I didn’t really know what Apache Kafka® would become but had an inkling that the next generation of applications would not be islands disconnected from one another, or lashed together with irregular, point-to-point bindings. When we founded Confluent, we took the radical approach of viewing data—and the infrastructure that supported it—as a series of real-time streaming events rather than something kept in static, sedentary data repositories. In the process, we created a new way for developers to think about creating modern applications that lean on a backbone of data in flight.

In four short years, Confluent has grown from an idea into an industry pioneer. And today, event streaming is changing the trajectory and velocity of business with more than 60 percent of the Fortune 100 rearchitecting their core data infrastructure around an event streaming platform. The catalyst behind this movement wasn’t necessarily just the technology but rather a community of developers who imagined what was possible and adopted this paradigm shift with us.

Our belief is that as companies collect their data in an event streaming platform, one that integrates real-time data across different parts of the company, it is only natural that they also need to process the event streams, joining and summarizing them on the fly. Whether you’re building a fraud detection system or a ride-sharing system, modern applications require data to be joined and summarized to serve their individual needs, with the summarized views continuously updated as new events are generated. That is the reason we created KSQL, which has now become one of the most popular tools in the Kafka ecosystem.

We continue to challenge ourselves to help developers expand what is possible and create more value with Confluent. To that end, we’re announcing that the PipelineDB team will be joining Confluent. As you may know, PipelineDB has piqued the attention of many and shown what is possible with a unique take on integrating streaming datasets into Postgres—one of the world’s most popular data-at-rest technologies that underpin the systems built by many of our customers as well as the industry at large. The underlying approach of continuously updated views on streaming data matched our vision of how developers build summarizations and joins on event streams with KSQL. Instead of throwing data to yet another database for it to sit idle—waiting to be queried—why not explore how that approach could be applied to event streams in Kafka? And that’s when both teams got serious about working together.

The PipelineDB team brings with them a vast wealth of experience, spanning both databases and stream processing. They also share our vision of a future powered by event streaming, and I very much look forward to seeing the result of their contributions as we complete the event streaming platform journey we started all those years ago.
